in my previous post, opalis: monitoring grey (gray) agents in opsmgr, I mentioned that the way opalis handles multiple objects moving down the pipeline is interesting. in this post, I'll go into some detail on the typical behavior and how to get around it.

next, next, next, finish

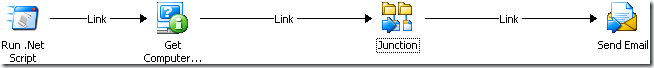

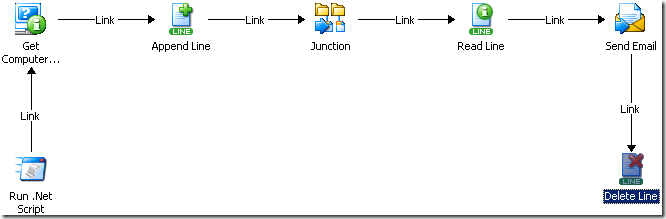

I guess we should start off with a picture. we'll use the grey agent scenario...

well, this certainly looks familiar. to elaborate, if we were sending one object down the path, this would work perfectly since a single object stemming from "run .net script" would pass through each object once. assuming we had three grey agents, the three would hit "get computer/ip status" and loop three times, once for each object. you know all this. once you get to "send email", three emails would fly out!
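to make the looping concrete, here's a toy model in python. it is not opalis code and doesn't call any opalis api. the function names and server names are made up for illustration, and I'm assuming every grey agent still answers a ping.

```python
def run_activity(activity, inputs):
    """Run an activity once per incoming item and collect everything it publishes."""
    published = []
    for item in inputs:
        published.extend(activity(item))
    return published


def get_computer_status(computer):
    # assumption: every grey agent still answers a ping
    return [computer]


def send_email(computer):
    # one email per incoming item, which is the default behavior described above
    return [f"{computer} can be pinged"]


grey_agents = ["server01", "server02", "server03"]  # hypothetical names
pingable = run_activity(get_computer_status, grey_agents)
emails = run_activity(send_email, pingable)
print(len(emails), "emails sent")
```

three items in means three runs of every activity downstream, so three emails.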

introducing the junction object

ideally, we want the names of each computer to go into some kind of collection and drop the entire collection into the email body, right? if it works correctly, the recipient would get one email with a list of computers that could be pinged. this is where junction comes in.

the junction object is basically used to wait for different branches to all complete before moving forward to the next object. junctions can also republish data if so desired from one of the previous branches (yes, just one) to be used by objects down the pipeline. however, junctions can also be used to kind of remove the looping iterations that occur with multiple objects. the help file states the following:

Note: You can choose “None” to propagate no data from any of the branches previous to the Junction object. In this scenario the object following the Junction will run once, regardless of the data provided in previous objects.

if we did exactly as I illustrated above, you wouldn't get the effect you were hoping for. if you grabbed the published data from "get computer/ip status" to use in the email body, you'd end up with multiple emails going out, as in the previous scenario. if you tried to get it from junction, it would fail since "none" is selected in the "republish data from" dialog. while we have achieved what we wanted to do, which is to cause a run-once behavior, we don't have the information from "get computer/ip status" in a helpful format.
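the trade-off can be sketched in the same toy style. again, this is only a model of the behavior described here, not how opalis implements a junction. `junction_none` and the data shapes are hypothetical, and I'm assuming at least one item reaches the junction.

```python
def junction_none(incoming):
    """Toy junction with "none" selected: one item leaves, carrying no data."""
    return [{}]


statuses = [{"computer": name} for name in ("server01", "server02", "server03")]
after_junction = junction_none(statuses)

# the activity after the junction runs once, but has no computer name to use
emails = [
    "pingable: " + item.get("computer", "<nothing published>")
    for item in after_junction
]
print(emails)
```

one email goes out, which is what we wanted, but there's nothing useful to put in it.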

text file management objects

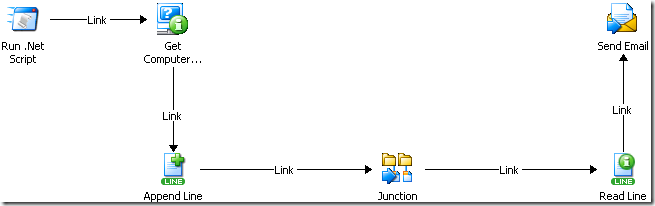

to correct that, we'll use the text file management objects to write out data before the junction and read in data after the junction.

this works successfully! however, the next time this runs, we would end up appending data to the same text file, which would send out new data on top of old data each time it ran. that's not the scenario we want. we're almost there though.
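here's a small python sketch of why the file keeps growing. it uses ordinary file i/o as a stand-in for the text file management objects. the file name and server names are hypothetical.

```python
import os
import tempfile


def run_policy_once(path, computers):
    """Append one line per computer, then read the whole file back."""
    for name in computers:  # stand-in for "append line", once per object
        with open(path, "a") as handle:
            handle.write(name + "\n")
    with open(path) as handle:  # stand-in for reading the file after the junction
        return handle.read().splitlines()


path = os.path.join(tempfile.mkdtemp(), "grey_agents.txt")  # hypothetical file
first_run = run_policy_once(path, ["server01", "server02"])
second_run = run_policy_once(path, ["server03"])
print(second_run)
```

the second run only found one grey agent, yet its email would still list the two from the first run.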

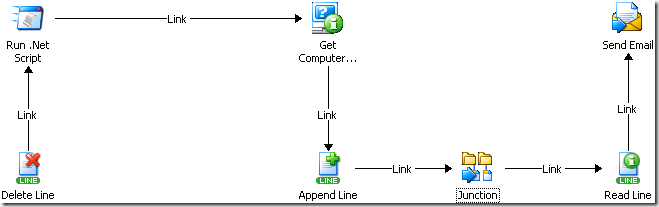

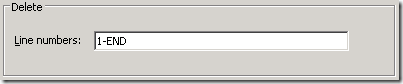

with delete line, we can easily clear out the file that holds the computer names at the beginning of each run. however, there could be one slight problem with this. can you tell what it is?

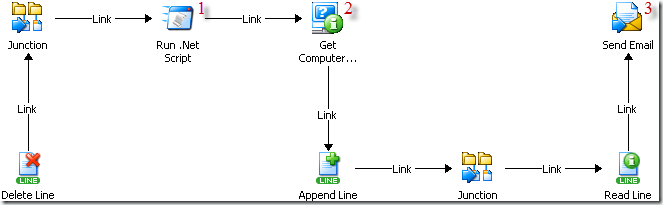

if we left "delete line" to run with its default behavior, the three grey agent names would cause a loop to occur three times at the next object, which is "run .net script". as you can imagine, you'd end up with quite a few more cycles than originally anticipated. to stop this behavior from occurring, you would simply change the run behavior to flatten the object on the way out. you could effectively do the same thing by using -- yes! -- a junction as illustrated here:

and now finally, we have a working policy!

to be fair, you could have also moved the delete line object to the tail end of the policy after "send email" to have the file cleared out. this doesn't seem as desirable in my opinion since the policy could fail at any point after "append line", leaving stale information in the file for the next execution. here's how that would look though:

you can't argue with simplicity though since this process doesn't require using a flatten behavior on "delete line" or a junction after "delete line". anyway, let's drop into the details for a minute to look at the text file management objects we're using and the run behaviors.

inspecting the details

looking back at one of the final policies, we'll use the one with a single junction so that different points can be illustrated.

![image[31] image[31]](http://lh5.ggpht.com/_QUcVXWX86jg/TIsJeLyBHGI/AAAAAAAAAVw/q4OwUOV65AQ/image%5B31%5D%5B2%5D.png?imgmax=800)

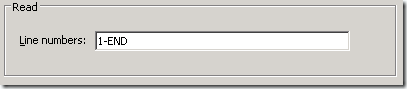

let's take it from the very top so that we don't lose any detail. to begin with, we start with "delete line". as you'll recall, in order to cleanse the file we're using to retain computer names during the workflow, the file must be emptied out before use. this is very simply done by specifying 1-END in the "line numbers" field.

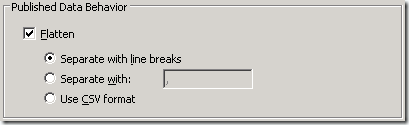

under the "run behavior" section, specify "flatten" as the "published data behavior".

we have now effectively stopped "delete line" from passing multiple values down the pipeline. only one iteration is passed to "run .net script". just as a reminder, you can achieve the same thing using junction with "none".
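as a rough illustration in python (a toy model, not opalis itself): I'm assuming "delete line" publishes one item per deleted line, which matches the three-loop behavior described earlier. the function names are made up.

```python
def delete_line(file_lines, flatten):
    """Toy "delete line": publishes one item per deleted line unless flattened."""
    deleted = list(file_lines)
    return [", ".join(deleted)] if flatten else deleted


def count_script_runs(file_lines, flatten):
    # the next activity runs once per item published by "delete line"
    return len(delete_line(file_lines, flatten))


leftover = ["server01", "server02", "server03"]  # names left over from the last run
print(count_script_runs(leftover, flatten=False))
print(count_script_runs(leftover, flatten=True))
```

without flatten, the script would run once per leftover line. with flatten, it runs once no matter how many lines were deleted.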

entering into the "run .net script" object, the powershell script is executed returning zero or more objects to the pipeline. each of these objects is passed on to the "get computer/ip status" object. this is exactly the behavior we want. if we tried to flatten it, they wouldn't be individually processed. it wouldn't do much good if we had more than one object. (by the way, I didn't write this policy to handle a zero count return from the powershell script. :o)

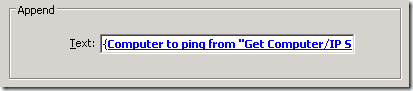

now of course, as we talked about before, trying to pass multiple objects to "send email" would cause multiple emails to generate. to stop this from happening, we pass the multiple objects into a junction to force only one iteration -- like a shower spout hitting a funnel and coming out as a single stream. in doing so, we lose distinction in each object that we were looping through. to capture that data, you can push the information to a text file, which is exactly what is illustrated.

now we have the information from each distinct object pushed into a text file. moving to junction will flatten the iterations down to one and move down the path into the "read line" object. in order to read all the lines in the text file, we simply use the same value we used in "delete line" -- "1-END".

if multiple objects went into the text file, multiple objects will come back out when it is read. this would cause a multiple iteration loop to occur again. to change this behavior, modify the "published data behavior" to "flatten". since we don't want the lines of the text file run together on a single long line, choose the "separate with line breaks" option.
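in python terms, flattening with line breaks amounts to joining the items into one string. this is only a sketch of the idea. the `flatten` helper, the server names, and the email wording are all hypothetical.

```python
def flatten(items, separator="\n"):
    """Collapse many published items into one, joined by the separator."""
    return [separator.join(items)]


lines_read = ["server01", "server02", "server03"]  # hypothetical file contents
flattened = flatten(lines_read)

# a single item goes on to the email step, so a single email is built
body = "the following computers can be pinged:\n" + flattened[0]
print(body)
```

a different separator would give a different layout in the email, but there would still be exactly one item going down the pipeline.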

with the text file data flattened, it can be used in the body of the email message to produce a list of machines instead of an email per machine. here's an example of how the "send email" properties were set up:

and here's how it looks coming out:

I sincerely hope that wasn't too confusing. I find with opalis it's often more difficult to explain a concept than to understand a concept. if you have any questions, feel free to drop me a line. you know where to reach me.
